Excerpted from the report “ICFA SCIC Report: Networking for High Energy Physics” by the International Committee for Future Accelerators (ICFA), Standing Committee on Inter-Regional Connectivity (SCIC), Chair: Harvey Newman, Caltech

The key role of networks has been brought sharply into focus as a result of the worldwide-distributed grid computing model adopted by the four LHC experiments as a necessary response to the unprecedented data volumes and computational needs of the physics program. As we approach the era of LHC physics, the experiments are developing the tools and methods needed to distribute, process, access and cooperatively analyze datasets with volumes of tens to hundreds of Terabytes of simulated data now, rising to many Petabytes of real and simulated data during the first years of LHC operation.

The scale of the required networks has been set by the distribution of data from the Tier0 at CERN to 11 Tier1 centers. It has also been set by the need to distribute data to more than 100 Tier2 centers at sites throughout the world. More than 40% of the computing and storage resources will be located at these Tier2s, and the majority of the data analysis, as well as the production of simulated data, is foreseen to take place there. This is complemented by hundreds of computing clusters (Tier3s) serving individual physics groups, where there will also be a demand for datasets of one to a few Terabytes once the LHC program is underway.

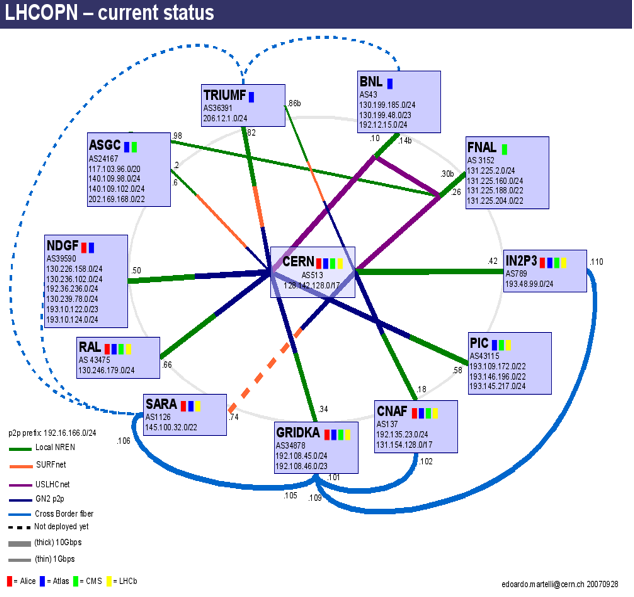

The LHC Optical Private Network (OPN) has been formed to respond to the highest-priority needs of the experiments: data distribution from the CERN Tier0 to the Tier1s, and data exchange among the Tier1s. As a “private network” where only designated flows between specified source and destination addresses are allowed, the OPN will serve to guarantee adequate, secure connectivity to and among the Tier1s, as long as the links continue to evolve in future years to meet the bandwidth needs. The present status of the OPN is shown in Figure 1. Each of the Tier1s is connected to CERN at a minimum bandwidth of 10 Gbps. Some Tier1s are connected by multiple 10 Gbps links; examples are BNL and Fermilab, which connect to CERN over ESnet and US LHCNet, and SARA in the Netherlands. The initial configuration of the OPN was largely a “star” network centered on CERN. This is being supplemented by an increasing number of links among the Tier1s (shown at the periphery of the figure) that provide backup paths, so that the OPN can sustain the required round-the-clock, nonstop operation. As indicated by the dashed lines in the figure, additional links are planned to provide backup paths for the centers in Canada, Taiwan, and the Nordic countries.
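The point of the Tier1-to-Tier1 links is that a Tier1 whose direct link to CERN fails can still reach CERN through another Tier1. A minimal sketch of that check follows. The topology is entirely hypothetical: the node names `T1_A` to `T1_D` and the backup edges are illustrative and do not reproduce the actual OPN in Figure 1. The functions `reachable` and `tier1s_with_backup` are likewise names introduced here for illustration.

```python
from collections import deque

# Hypothetical topology: a star centred on CERN plus a few Tier1-Tier1
# backup links. Names and edges are illustrative, not the real OPN.
LINKS = [
    ("CERN", "T1_A"), ("CERN", "T1_B"), ("CERN", "T1_C"), ("CERN", "T1_D"),
    ("T1_A", "T1_B"),  # hypothetical backup link between two Tier1s
    ("T1_B", "T1_C"),  # hypothetical backup link between two Tier1s
]


def reachable(links, start, goal):
    """Breadth-first search over an undirected list of links."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in adj.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def tier1s_with_backup(links, hub="CERN"):
    """Return the Tier1s that can still reach the hub if their direct link fails."""
    tier1s = sorted({b for a, b in links if a == hub} | {a for a, b in links if b == hub})
    protected = []
    for t1 in tier1s:
        # Drop only the direct hub<->t1 link and search for an alternative path.
        remaining = [link for link in links if set(link) != {hub, t1}]
        if reachable(remaining, t1, hub):
            protected.append(t1)
    return protected


print(tier1s_with_backup(LINKS))  # ['T1_A', 'T1_B', 'T1_C']
```

In this toy topology `T1_D` has only its star link to CERN. It is therefore the kind of center for which a planned backup link (a dashed line in the figure) would close the gap.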

In addition to the Tier0 and Tier1 connectivity shown above, the Tier2s also have very important roles to play in the overall LHC Computing Model. They provide much of the resources and are focal points for analysis. As part of this role, each Tier2 requires connectivity to its corresponding Tier1 (in the ATLAS version of the Model) or to the ensemble of Tier1s (in the CMS version of the Model). For Tier2s to access the datasets they need, substantial data must flow both within and beyond the OPN, across GÉANT2, Internet2, NLR, and other national research and education networks (NRENs). Tier2 connectivity requirements have been variously estimated at 1 to 10 Gbps, depending on the availability and affordability of bandwidth in each region. The Tier2s in the US, for example, either already have or are planning 10 Gbps connections before LHC startup.
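To see what the 1 to 10 Gbps range means in practice, here is a rough worked example: the time to move a Terabyte-scale dataset over a single link. This is a back-of-the-envelope sketch, not a figure from the report. It assumes decimal units (1 TB = 10^12 bytes, 1 Gbps = 10^9 bits/s) and a sustained fraction of line rate given by the hypothetical `efficiency` parameter, which defaults to an idealized 1.0.

```python
def transfer_hours(dataset_tb, link_gbps, efficiency=1.0):
    """Hours to move dataset_tb terabytes (10**12 bytes each) over a link of
    link_gbps gigabits per second, sustaining `efficiency` of line rate."""
    bits = dataset_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


for gbps in (1, 10):
    # Idealized full-line-rate transfer of a 1 TB dataset.
    print(f"{gbps:>2} Gbps: {transfer_hours(1, gbps):.2f} h per TB")
```

Under these assumptions a 1 TB dataset takes about 2.2 hours at 1 Gbps and about 13 minutes at 10 Gbps. Any real-world efficiency below 1.0 stretches both times proportionally.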

URL: http://monalisa.caltech.edu:8080/Slides/Public/SCICReports2008 or http://monalisa.cern.ch:8080/Slides/Public/SCICReports2008

Figure 1: The LHC Optical Private Network (OPN) Status (September 2007)
